Virtual Representations of Real Locations:

Using emerging animation technologies for environmental awareness

This page contains slides and text prepared for the Society for Animation Studies annual conference at Rowan University.

Dr Gina Moore, June 2023.

Macquarie Island

Officially part of Australia, Macquarie Island is situated approximately halfway between the southern state of Tasmania and Antarctica.

Below are some photos from the Australian Antarctic Division website. They show some of Macquarie Island's inhabitants, including scientists who work there.

Photographs sourced from the Australian Antarctic Division website.

Virtual Macquarie Island

I've recently been working on a project called Virtual Macquarie Island, or VMI. It's a representation of Macquarie Island made using 3D animation and game engine software. Specifically, we have used Maya by Autodesk, Houdini by SideFX, and Unreal Engine by Epic Games. There are several people involved in VMI, but most of the content so far has been created by Peter Morse and me, with elephant seals by Jack Cornish. Peter started the project in 2020 and approached me in 2021 to create vegetation and wildlife. I also ended up working on the terrain. Peter has a background in geophysical representation and experience in filmmaking. My background is in fine arts: I was a painter and sculptor before moving into 3D animation in 2001.

The movie above shows excerpts from an 18-minute educational film which is on display at the Tasmanian Museum and Art Gallery. The film is basically a flythrough; the full version has music and a voice-over. VMI is an ongoing project. Having completed this linear film, our next goal is to create an interactive and immersive version, which can be experienced through a VR headset.

Macquarie Island is an important breeding ground for a variety of animals including wandering albatross, elephant seals, fur seals, king penguins, and royal penguins. Hurd Point is at the south end of the island and has the largest penguin colony in the world (around 500,000 pairs).

Macquarie Island is a World Heritage site and not accessible without a permit. Peter and I haven't yet been able to visit. Instead, we have been studying maps, watching films, and looking at photos. We know that visiting the island would be a rich sensory experience and our work could never capture the sights, sounds, and smells of the real place. No representation can match the real thing; not a photo, or a film, and certainly not a 3D animation.

So the obvious question is, why build a virtual version of a real location? It sounds like a futile exercise with little room for creativity. My presentation today is structured as three answers to this excellent question.

Why build a virtual version of a real location?

Answer 1. To encourage an appreciation of a real location by giving viewers a new understanding or a shift in perspective.

My first answer is to encourage an appreciation of a real location by giving viewers a new understanding or a shift in perspective.

Relevant work - shifts in perspective and VR

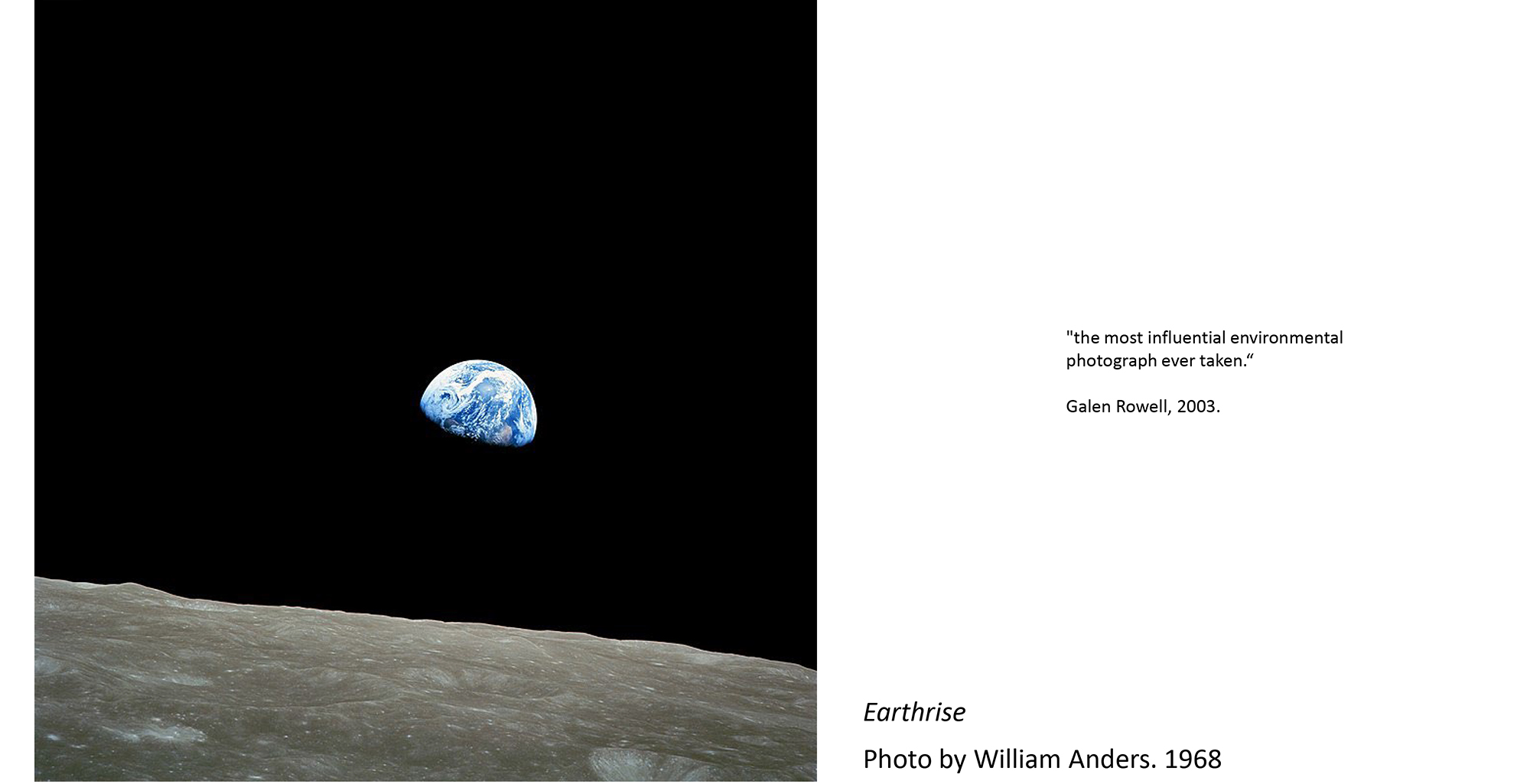

An example of a perspective shift is the iconic photo, "Earthrise", taken more than 50 years ago by astronauts orbiting the moon. At this moment in history, technologies of space travel and photography allowed them to capture a new viewpoint, looking back at our planet from space. This photo apparently gave viewers a sense of Earth's fragility and has been called "the most influential environmental photograph ever taken" (Life Books, 2003).

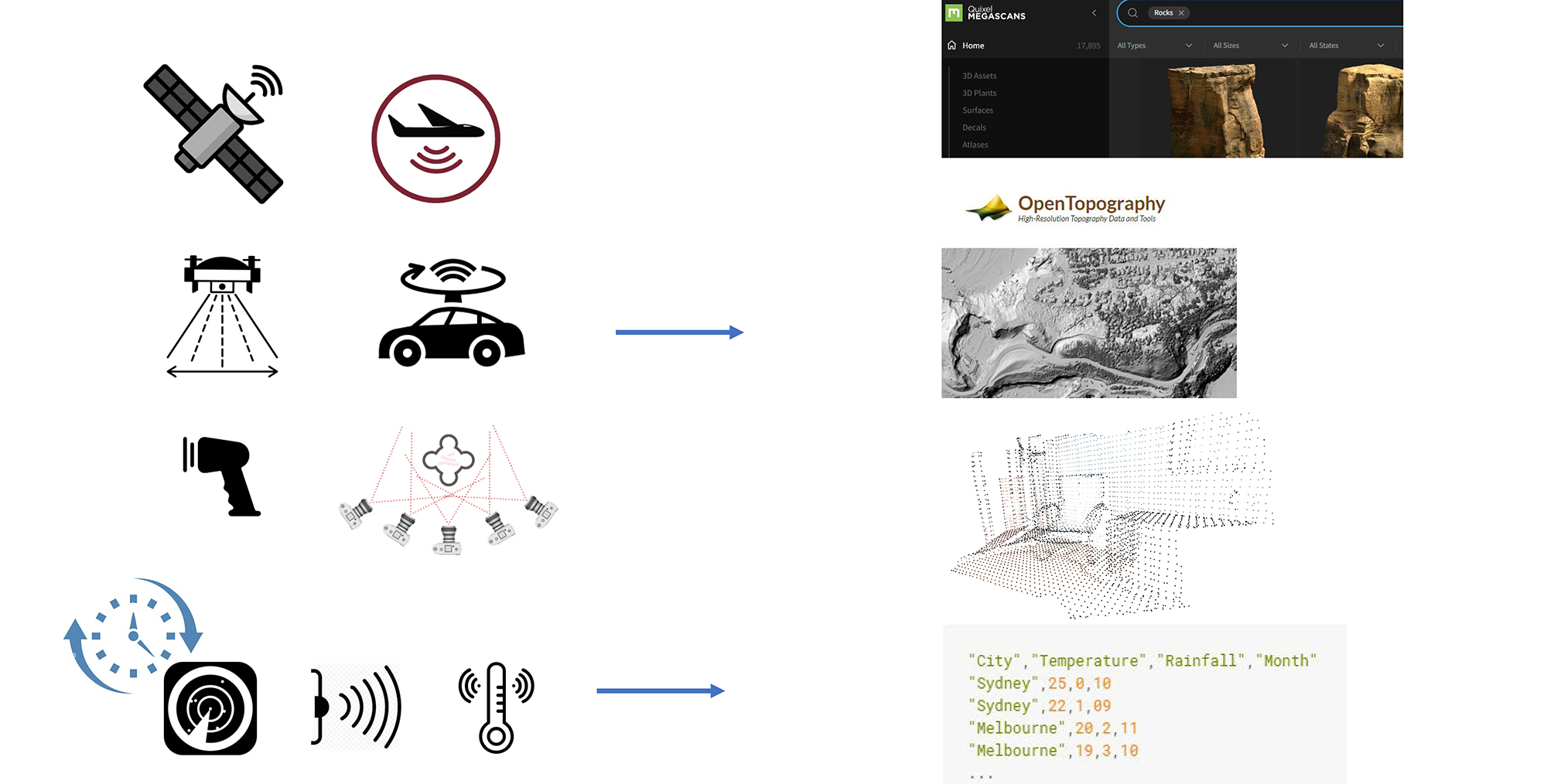

Today, more than 50 years later, our technological landscape is very different. As well as advances in computer graphics (including realtime animation, VR, and AI), changes relevant to animators include the proliferation of reality capture devices such as satellites, scanners, cameras, and sensors. These technologies sample physical objects and convert them into data. Animators encounter this data in various formats including images, surfaces, point clouds, and other realtime formats.

Diagram of reality capture devices. Sources include Open Topography and Quixel Megascans

Created by Marshmallow Laser Feast in 2020, "The Eyes of the Animal" is a VR artwork featuring point clouds of a forest. It uses the fluid point of view enabled by VR to give viewers an animal's perspective. VMI also aims to incorporate a fluid perspective, including shifts in scale, and style of locomotion. It's our hope that future viewers of VMI will be able to see through the eyes of a penguin, fly like an albatross, or swim with fish and seals.

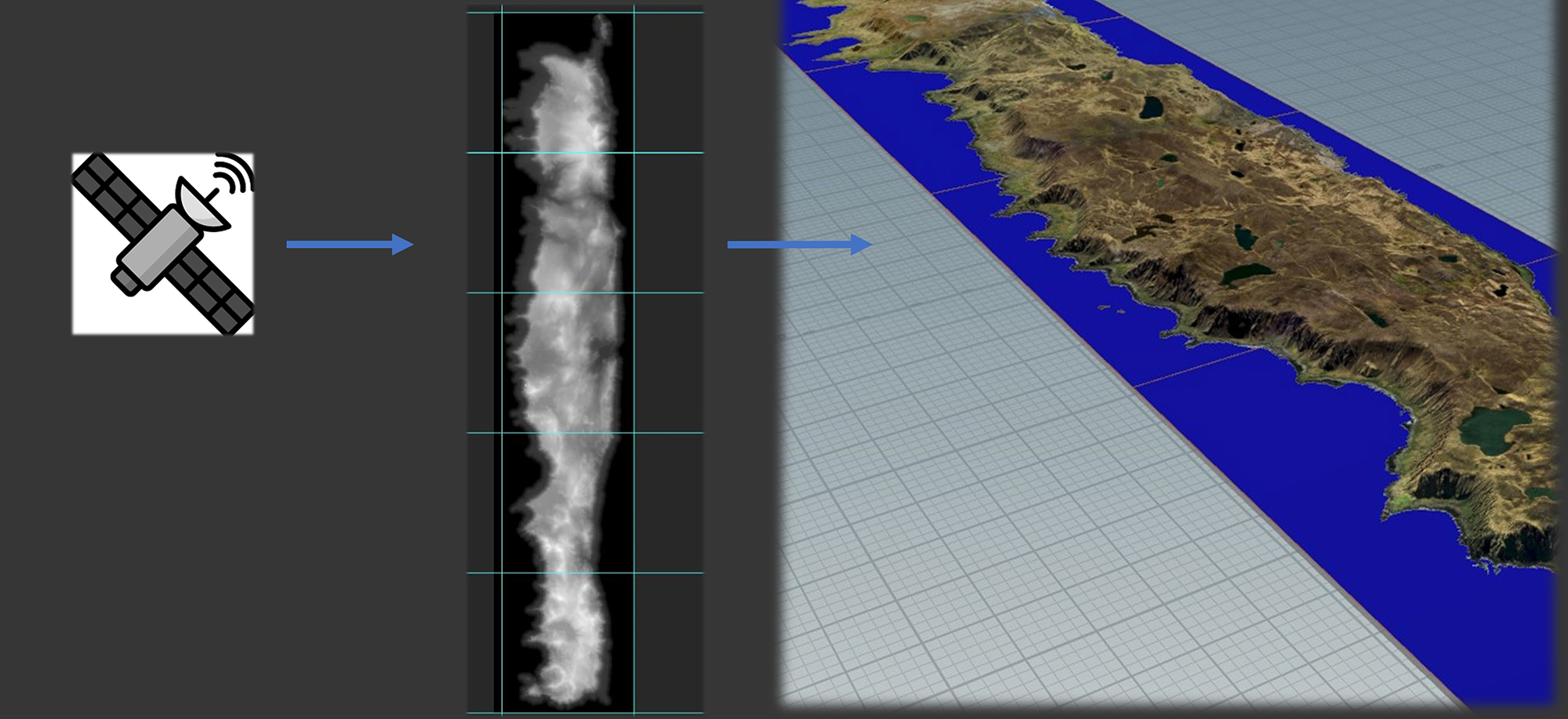

Our first iteration of VMI uses satellite data to generate a basic terrain. In future iterations, we plan to incorporate other data inputs. For example, near real-time satellite data could drive animal numbers and placement.

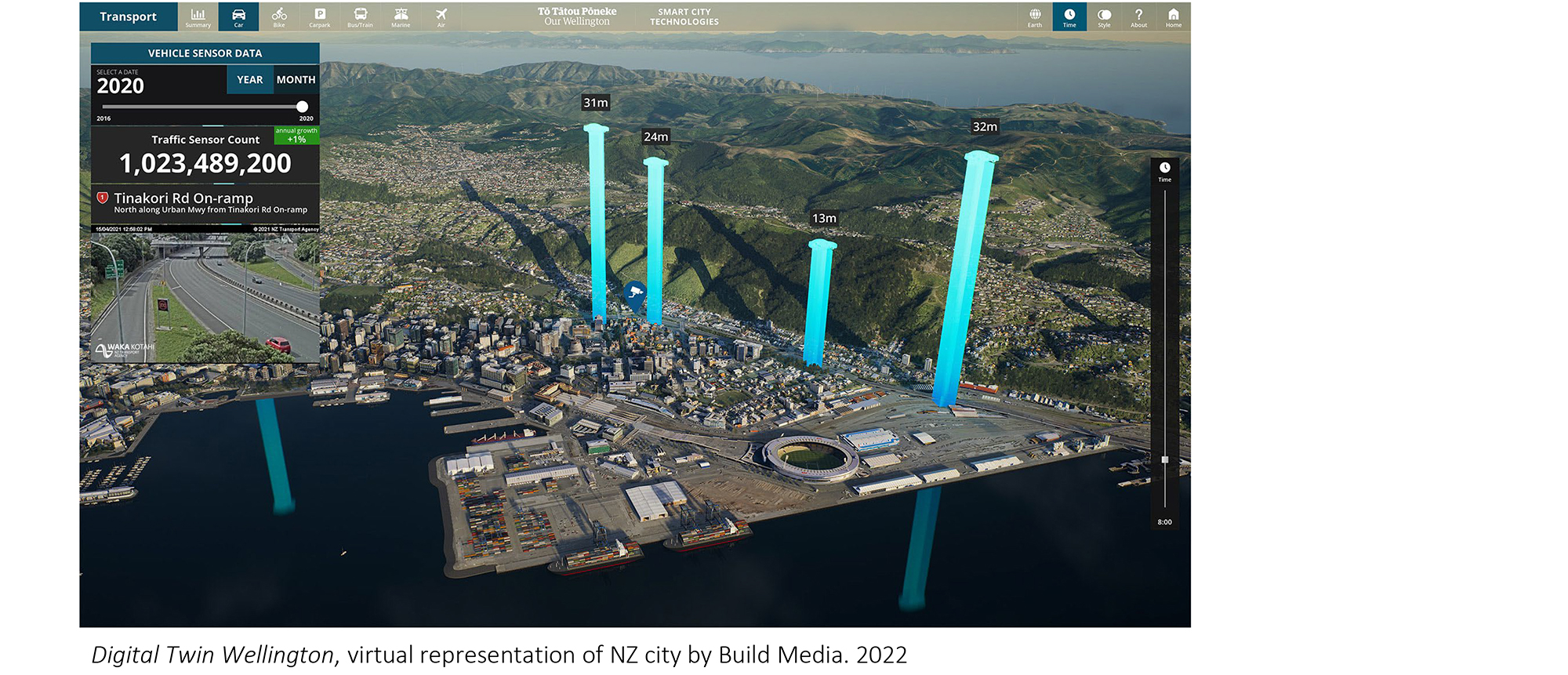

Relevant work - new understandings and digital twins

A virtual representation which continually updates to reflect the state of a physical object or place is often referred to as a digital twin. Below is a screenshot from Digital Twin Wellington, created by Build Media in 2022. Digital Twin Wellington is a virtual model of the New Zealand city. It uses realtime data to provide transportation statistics including bus, rail, ferry, bike, car, and air traffic, as well as car parking availability. Like other digital twins, it is used for communicating with stakeholder groups, understanding trends, and testing future scenarios. Similarly, there will be opportunities for VMI to incorporate statistics about wildlife, vegetation, and geology using realtime sensors and observations manually recorded by scientists. As well as appealing to the public, VMI can be used for science communication, training, and analysis.

Relevant work - Proceduralism

Future VMI viewers will be free to explore the island, observing it from a distance and up close. This freedom means we need to generate many detailed objects. In response to similar needs, game designers use procedural techniques to create and distribute assets via a designed set of rules, rather than manual manipulation. No Man's Sky, created by Hello Games in 2016, was one of the first games to rely extensively on procedural generation (Morin, 2016).

Proceduralism in VMI

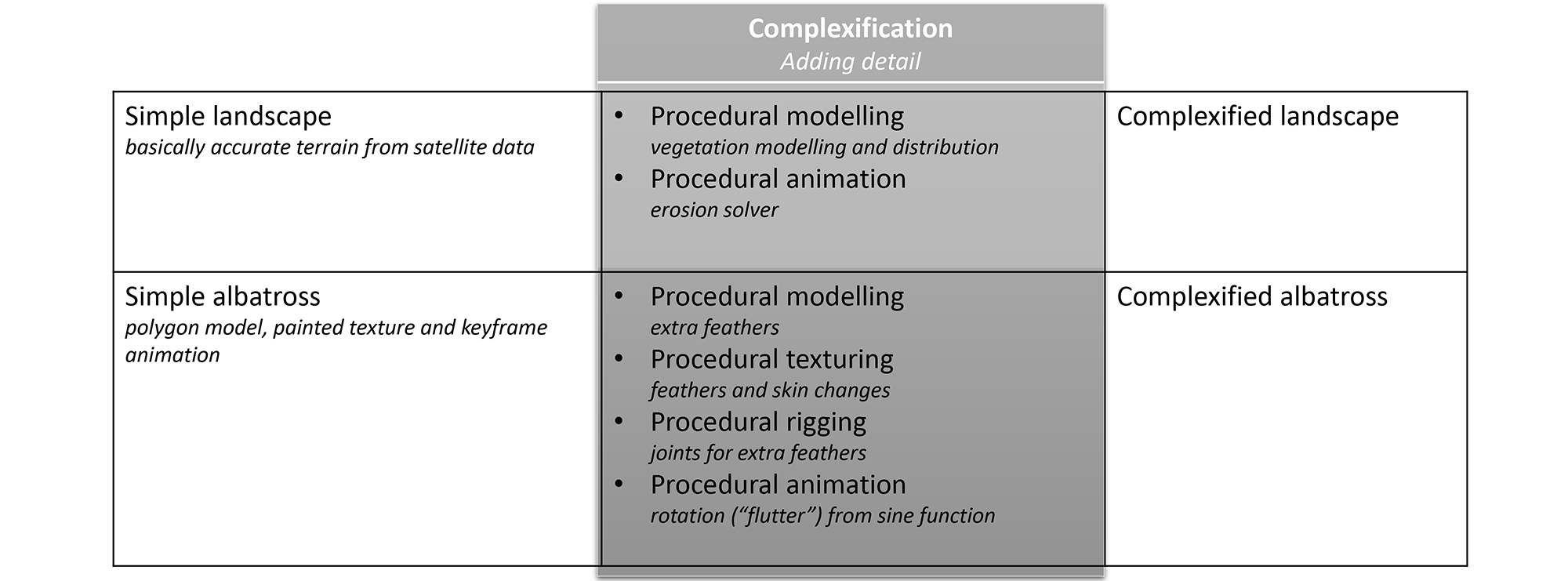

I'll now give an overview of proceduralism in VMI. Specifically, I'll describe workflows used for the landscape and the wandering albatross. In both cases, proceduralism is used to take a simple input and make it more complex or detailed.

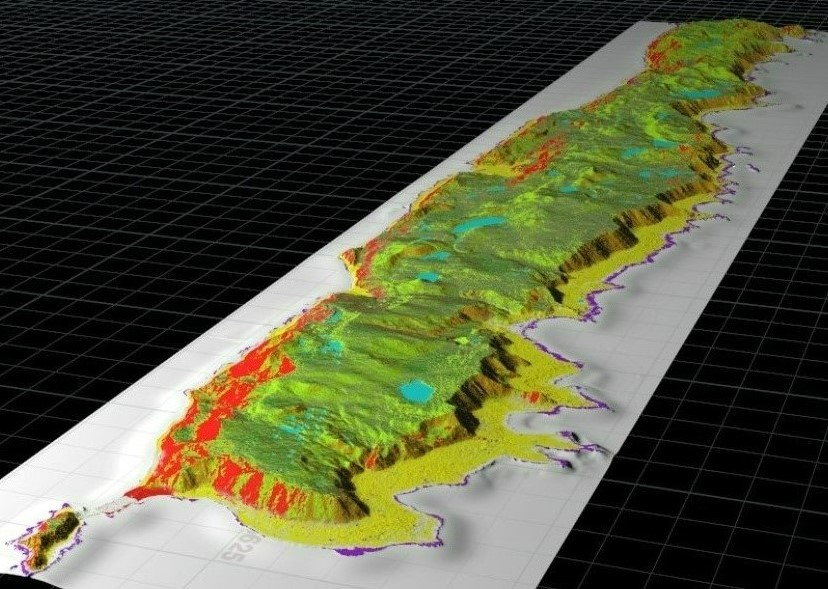

Terrain - Basic surface from satellite data

As mentioned earlier, the VMI terrain was created using satellite data. From the data we produced a black and white image. From the image, we created a "Heightfield" surface in Houdini. This surface was basically accurate and it looked OK from a distance, but it didn't look good up close because of sparse sampling and data glitches.

Terrain - Detail with procedural noise

I smoothed the surface using a combination of image blur in Photoshop and Heightfield smoothing techniques in Houdini. This reduced glitches, but the surface now lacked detail. I reintroduced detail using various procedural surfacing techniques including Heightfield noise.
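As a rough illustration of the terrain steps described so far (image to heights, smoothing, then noise), here is a minimal Python sketch. It is not the Houdini network used for VMI: the function names, the 3x3 box blur, the seeded random noise, and the `max_height` value are all assumptions chosen for brevity.

```python
import random


def image_to_heights(gray, max_height):
    """Map 0-255 grayscale pixel values to heights between 0 and max_height."""
    return [[px / 255.0 * max_height for px in row] for row in gray]


def box_blur(heights):
    """Average each cell with its 3x3 neighbourhood.

    Neighbours that fall outside the grid are skipped.
    """
    rows, cols = len(heights), len(heights[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            total, count = 0.0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += heights[rr][cc]
                        count += 1
            out_row.append(total / count)
        out.append(out_row)
    return out


def add_noise(heights, amplitude, seed=0):
    """Add seeded random offsets in [-amplitude, amplitude] to every cell.

    A stand-in for coherent procedural noise; real terrain noise is smoother.
    """
    rng = random.Random(seed)
    return [[h + rng.uniform(-amplitude, amplitude) for h in row]
            for row in heights]


# A single bright pixel plays the role of a data glitch (hypothetical values).
gray = [[0, 0, 0],
        [0, 255, 0],
        [0, 0, 0]]
raw = image_to_heights(gray, max_height=400.0)
smoothed = box_blur(raw)             # the spike is spread out and lowered
detailed = add_noise(smoothed, 0.5)  # small-scale variation is put back
```

The blur removes the spike but also flattens everything else, which is why a separate noise step is needed to bring back detail.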

Terrain - selection with procedural masks

Key to this workflow is the ability to apply noise selectively, and for this I used procedural masks. The movie below indicates how masks (shown in red) are defined using parameters such as slope, height, and direction.

Terrain - parametric plants distributed via procedural masks

To accurately scatter vegetation, I found we could generate masks that resemble botanical maps of the Island like those shown in this book. The resemblance between procedural masks and vegetation maps isn't surprising because plant distribution follows similar rules. The plant models are procedural (or parametric), so we can change their form according to their location on the terrain.
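To make the idea of rule-based masks and scattering more concrete, here is a small Python sketch. It builds a mask from slope and height only (direction is left out for brevity) and then scatters plants inside the mask. The function names, thresholds, and grid values are hypothetical and are not taken from the VMI project.

```python
import math
import random


def slope_degrees(heights, r, c, cell_size):
    """Approximate slope at a cell from height differences to its neighbours.

    Uses central differences, falling back to one-sided differences at edges.
    The grid must be at least 2x2.
    """
    rows, cols = len(heights), len(heights[0])
    r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
    c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
    dz_dy = (heights[r1][c] - heights[r0][c]) / ((r1 - r0) * cell_size)
    dz_dx = (heights[r][c1] - heights[r][c0]) / ((c1 - c0) * cell_size)
    return math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))


def build_mask(heights, cell_size, max_slope, min_height, max_height):
    """Return 1.0 where a cell satisfies every rule and 0.0 elsewhere."""
    mask = []
    for r, row in enumerate(heights):
        mask_row = []
        for c, h in enumerate(row):
            ok = (min_height <= h <= max_height
                  and slope_degrees(heights, r, c, cell_size) <= max_slope)
            mask_row.append(1.0 if ok else 0.0)
        mask.append(mask_row)
    return mask


def scatter(mask, density, seed=0):
    """Pick (row, col) cells for plants; unmasked cells are never picked."""
    rng = random.Random(seed)
    return [(r, c)
            for r, row in enumerate(mask)
            for c, m in enumerate(row)
            if rng.random() < m * density]


# Flat low ground on the left, a steep rise to a high plateau on the right.
heights = [[10.0, 10.0, 200.0],
           [10.0, 10.0, 200.0],
           [10.0, 10.0, 200.0]]
mask = build_mask(heights, cell_size=10.0, max_slope=30.0,
                  min_height=0.0, max_height=100.0)
plants = scatter(mask, density=1.0)
```

Because each plant species can have its own thresholds, the same terrain can yield several masks, one per vegetation type.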

Terrain - Erosion simulation for fine detail

I added fine detail to the terrain using Houdini's erosion solver, which produces an animated, or time-based, simulation. Attributes created by the solver were used during texturing. This is interesting because we often think of 3D modelling, texturing, and animation as separate processes. But in many contemporary workflows the distinction between them is unclear. As 3D animation evolves, I think we need new understandings of what it is, and what it can do. This leads me to my second answer to the key question.

Why build a virtual version of a real location?

Answer 2. To appreciate 3D Animation as data manipulation.

Why build a virtual version of a real location? Because the process helps us appreciate 3D as a type of data manipulation.

This sounds incredibly dry, but it doesn't have to be. Appreciating data, rather than the user interface, as our medium encourages experimentation; it can lead to novel outcomes and new methodologies.

Wandering Albatross - traditional 3D character workflow

To create the Wandering Albatross, I started with an orthodox 3D character workflow. This means that I modelled the bird in a default pose; I laid out the UVs and painted a texture; and I added joints to deform the mesh, and controls to manipulate the joints. I then created key poses throughout the timeline, allowing the software to interpolate between them.

Notice that describing the workflow like this (modelling > texturing > rigging > animation) foregrounds the resemblance between 3D and stop motion animation, where movement is added to a lifeless mannequin. In truth, the resemblance between these analogue and digital processes is limited. For a start, the digital process is more modular than linear. This means there's more iteration, more backwards and forwards, than the movie above suggests. I'll now describe how I used proceduralism to complexify this basic character and ended up with a new bird methodology.

Wandering Albatross - procedural modelling and texturing of flight and covert feathers

The movie below shows a top view of the basic bird imported into Houdini, where I used procedural tools to rebuild the flight feathers and add more by interpolating between the originals. Studying albatross photos, I noticed that flight feathers change length and bend across the wing, and that they are dark with some white bits close to the body.

Whether distributing plants across a landscape, or feathers across a bird's wing, the process is basically the same. It involves observing rules or patterns in nature, and building procedural systems to implement these rules.

Using similar techniques, I created covert feathers below and above the flight feathers. I noticed that the upper coverts have a distinctive pattern which I created using procedural noise, remapping the noise values along two axes and adding some variation.

To better hide the juncture between feathers and body, I made procedural adjustments to the body texture by adding point colour using the same noise and attribute transfer. I also added some tail and body feathers to break up the silhouette.

Wandering Albatross - procedural joints moving

Geometry used to create the feathers was also used to create joints to deform them. This functionality is available thanks to Houdini's new procedural rigging tools. I parented the new joints to the existing keyframed joints (imported from Maya) and they moved with the bird by default, but they didn't rotate correctly. Problems are part of every 3D project, but this problem had me stumped for days and felt like a real roadblock. I had to learn the specific functions required (including VEX matrix functions) but I eventually found a solution.

Wandering Albatross - procedural joints moving and rotating

Reflecting now, I realise that I was able to solve this problem because of my intuitive understanding of interpolation. Found in all areas of computing, interpolation means producing new values based on existing values. In this case, I needed new joint rotations based on existing keyframed rotations.

3D animators rely on interpolation all the time, and in a wide variety of contexts including keyframe animation and mesh subdivision, i.e. modelling. At the level of the interface these processes are entirely different, but as data manipulation they are very similar. Interpolation is one of a handful of basic principles ubiquitous in 3D. A full overview of this and other principles is for another paper. Today I want to emphasise that approaching 3D as data manipulation encourages an intuitive understanding of core 3D principles, which are key to problem solving.
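The point that keyframe animation and subdivision share the same underlying operation can be shown with a short Python sketch. Both functions below call the same `lerp` helper. This is an illustration only, not the VEX solution mentioned above: the names are hypothetical, rotations are treated as single angles (production rigs usually interpolate matrices or quaternions), and real subdivision schemes use weighted averages rather than plain midpoints.

```python
def lerp(a, b, t):
    """Linear interpolation: t=0 returns a, t=1 returns b."""
    return a + (b - a) * t


def sample_keyframes(keys, frame):
    """Return the value at `frame` from a sorted list of (frame, value) keys.

    Frames outside the keyed range hold the first or last key value.
    """
    if frame <= keys[0][0]:
        return keys[0][1]
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            return lerp(v0, v1, (frame - f0) / (f1 - f0))
    return keys[-1][1]


def subdivide(points):
    """Insert a midpoint between each pair of neighbouring polyline points."""
    out = [points[0]]
    for p, q in zip(points, points[1:]):
        out.append(tuple(lerp(a, b, 0.5) for a, b in zip(p, q)))
        out.append(q)
    return out


# Animation: a joint angle keyed at frames 0 and 10, sampled at frame 5.
angle = sample_keyframes([(0, 0.0), (10, 90.0)], 5)

# Modelling: a two-point edge gains a new point halfway along it.
edge = subdivide([(0.0, 0.0), (2.0, 4.0)])
```

In one case the new value is an in-between pose and in the other it is an in-between point, but the arithmetic is identical.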

Wandering Albatross - flutter test

After generating procedural feather geometry and joints, I noticed an opportunity to make the feathers flutter, as if they are blowing in the wind. The movie below shows an initial test for a flutter system. Basically, points are rotated using a sine function with the amplitude and offset altered along the length of the joint chain.

Wandering Albatross - procedural flutter animation
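The sine-based rotation used in the flutter test can be sketched in a few lines of Python. This is a simplified reading of the description, not the Houdini setup itself: the function name, the linear amplitude ramp from root to tip, and the `phase_step` parameter are assumptions.

```python
import math


def flutter_angles(num_joints, time, base_amplitude, frequency, phase_step):
    """Return a rotation angle in degrees for each joint in a chain.

    Amplitude ramps from 0 at the root to base_amplitude at the tip, and each
    joint is delayed by phase_step radians relative to the one before it.
    """
    angles = []
    for i in range(num_joints):
        # Normalised position along the chain: 0 at the root, 1 at the tip.
        u = i / (num_joints - 1) if num_joints > 1 else 0.0
        amplitude = base_amplitude * u
        offset = phase_step * i
        angle = amplitude * math.sin(2.0 * math.pi * frequency * time - offset)
        angles.append(angle)
    return angles


# Five joints, a quarter of the way through a one-second cycle, no delay.
angles = flutter_angles(num_joints=5, time=0.25, base_amplitude=10.0,
                        frequency=1.0, phase_step=0.0)
```

With a non-zero `phase_step` the peak of the wave reaches each joint slightly later, so the motion appears to travel along the feather.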

The movie below shows the flutter integrated into the bird. Here I control the flutter manually, but it could be automatic. For example, the amplitude could be driven by the velocity (or speed) of the bird and/or the angular velocity of the feather. Note how procedural systems can start simple and get increasingly complex.

Wandering Albatross - complexified with procedural modelling, texturing, rigging and animation

Here is my complexified albatross.

Easily make changes and see them in context - Having created this hybrid procedural bird, I can now make changes to the feather geometry and the textures quite easily, and I can see the changes in the context of different poses. This is particularly useful for birds because textures need to work for wings both outstretched and folded.

Create complex geometry, textures, and movement - As well as easy changes, proceduralism has enabled me to create geometry, textures and movement that would be difficult to create manually. For example, my first attempt at texturing the bird was in Substance Painter where I struggled to paint a plumage map by hand.

Transfer geometry attributes - Now that I have detailed geometry, I can transfer the attributes from this mesh to the original low resolution (simple) bird. This is useful for birds seen in the distance.
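One simple way to think about attribute transfer is as a nearest-point lookup: each point on the simple mesh copies a value from the closest point on the detailed mesh. The Python sketch below shows that idea only. The function name and sample data are hypothetical, and the tools used in production typically blend several nearby values rather than copying just one.

```python
import math


def transfer_attribute(source_points, source_values, target_points):
    """For each target point, copy the value of the nearest source point."""
    result = []
    for target in target_points:
        nearest = min(range(len(source_points)),
                      key=lambda i: math.dist(source_points[i], target))
        result.append(source_values[nearest])
    return result


# Detailed mesh: three points with a colour each (white, grey, black).
detailed_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
detailed_colours = [(1.0, 1.0, 1.0), (0.5, 0.5, 0.5), (0.0, 0.0, 0.0)]

# Simple mesh: two points that pick up colour from their nearest neighbour.
simple_points = [(0.1, 0.0, 0.0), (1.9, 0.0, 0.0)]
simple_colours = transfer_attribute(detailed_points, detailed_colours,
                                    simple_points)
```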

3D animator Zak Katara describes proceduralism as "A live system which allows for an asset to be changed with ease" (Katara, 2020). Like many, he notes a distinction between procedural and destructive, linear workflows. In truth, even the orthodox 3D character workflow is procedural to a degree. For example, after seeing the bird in context, I made changes to the skin weights and the keyframe animation.

With an understanding of data flows and dependencies, we can build stable systems that support iterative design improvements. This is something that new 3D users, including students, find difficult.

Recognising 3D as data manipulation is close to my heart as an artist and as a teacher because it helps students understand core 3D principles, as well as the importance (and power) of live, flexible pipelines. In short, with 3D what we see in the viewport is only ever the tip of the iceberg. How something is made - the hidden system or structure that produces it - is powerful and important.

VMI animals - vertex animation textures (VAT)

Data is a fluid medium in the sense that it can be translated into various visual outcomes.

For example, a colour image (like the one above) can drive the position of points on a mesh (like the one below). R,G,B becomes X,Y,Z. This is the basic functionality of Vertex Animation Textures (or VAT). VAT workflows and plugins have been developed by people who understand 3D as data. The same recognition is useful for users who want to implement VAT workflows and explore what they can do.
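The R,G,B to X,Y,Z mapping can be written out as a short Python sketch. It assumes 8-bit colour channels, a bounding box used to rescale each channel into world units, and a texture layout with one row per frame and one column per vertex. These are illustrative assumptions; actual VAT tools differ in bit depth, layout, and whether they store positions or offsets.

```python
def decode_vat_pixel(rgb, bounds_min, bounds_max):
    """Map an 8-bit (R, G, B) pixel to an (X, Y, Z) position.

    Each channel is normalised to 0..1 and rescaled into the bounding box.
    """
    return tuple(lo + (channel / 255.0) * (hi - lo)
                 for channel, lo, hi in zip(rgb, bounds_min, bounds_max))


def decode_frame(texture, frame, bounds_min, bounds_max):
    """Decode every vertex position for one frame of animation.

    texture[frame][vertex] holds one RGB pixel (assumed layout).
    """
    return [decode_vat_pixel(pixel, bounds_min, bounds_max)
            for pixel in texture[frame]]


# Two frames, two vertices (hypothetical pixel values).
texture = [
    [(0, 0, 0), (255, 255, 255)],  # frame 0
    [(0, 255, 0), (255, 0, 255)],  # frame 1
]
positions = decode_frame(texture, 1,
                         bounds_min=(-1.0, -1.0, -1.0),
                         bounds_max=(1.0, 1.0, 1.0))
```

Stepping through the rows of the texture over time plays the animation back, with each row acting as a stored pose.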

VAT was developed to offload computation to the graphics card, freeing up the CPU. It is commonly used for crowd scenes, where there are lots of animated individuals. We're using it to represent the Royal Penguin colony on Macquarie Island, which is the largest in the world. I've found we can also use VATs to integrate animals with their environment, as in this example of a porpoising penguin.

Conclusion

Now for my third answer and a concluding remark.

Why build a virtual version of a real location?

Answer 3. To explore the implications of new technologies.

Why build a virtual version of a real location? To explore the implications of new technologies including their impact on physical environments. The quote below, by artist and writer James Bridle, echoes my own belief that we need more people, and a greater diversity of people, engaging with emerging technologies.

Through experimental practice (or "tinkering") we can gain an awareness or understanding that can't be achieved in any other way.

"I believe it's crucially important for as many of us as possible to be engaged in thinking through the implications of new technologies; and that this process has to include learning about and tinkering with the things ourselves." (Bridle, 2022)

Works Cited

Life Books. (Ed.). (2003). 100 Photographs that changed the world.

Bridle, J. (2022). Ways of Being: Animals, Plants, Machines: The Search for a Planetary Intelligence. Farrar, Straus and Giroux.

Katara, Z. (2020). COLONIZING MARS – HOUDINI AND PROCEDURALISM WITH ZAK KATARA. Nice Moves. https://nicemoves.org/pastevent/colonizing-mars/

Morin, R. (2016). Inside the Artificial Universe That Creates Itself. https://www.theatlantic.com/technology/archive/2016/02/artificial-universe-no-mans-sky/463308/